Microsoft upgrades its in-house AI chip to reduce dependence on NVIDIA, claiming to outperform Amazon's Trainium and surpass Google's TPU

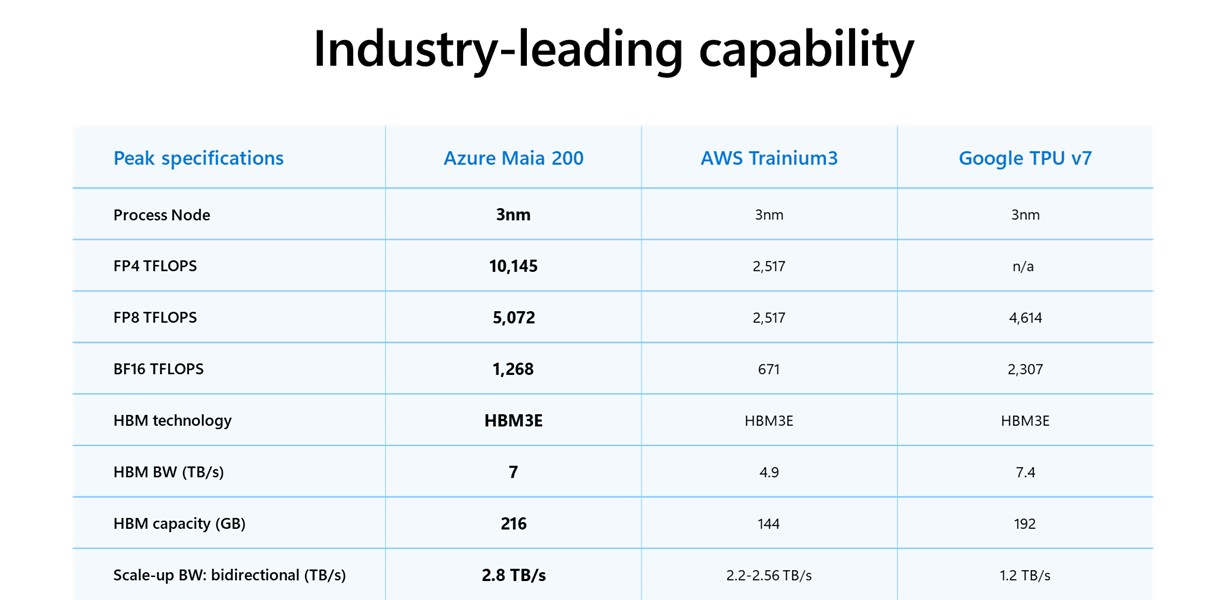

Maia 200 is built on TSMC's 3-nanometer process. Microsoft calls it its most efficient inference system to date and "the highest-performing self-developed chip among all hyperscale cloud service providers," with 30% better performance per dollar than the latest hardware Microsoft currently uses. Its FP4 performance is three times that of Amazon's third-generation Trainium chip, and its FP8 performance exceeds that of Google's seventh-generation TPU. It will support OpenAI's GPT-5.2 model. Microsoft has released a preview of the chip's software toolkit to developers, plans to rent the chip to more cloud customers in the future, and is already designing the next-generation Maia 300.

Microsoft released its second-generation in-house artificial intelligence (AI) chip, Maia 200, on Monday, the 26th (Eastern Time). The chip is central to Microsoft's effort to reduce its dependence on NVIDIA chips and run its services more efficiently. Manufactured on TSMC's 3-nanometer process, it is already being deployed in data centers in Iowa and will next go to the Phoenix area, marking a significant advance in Microsoft's in-house chip effort.

Scott Guthrie, head of Microsoft's cloud and AI business, said in a blog post that Maia 200 is "the most efficient inference system ever deployed by Microsoft," delivering 30% better performance per dollar than Microsoft's current latest-generation hardware. The chips will first go to Microsoft's superintelligence team, which will use them to generate data for improving the next generation of AI models, and will also power the enterprise-oriented Copilot assistant and other AI services, including OpenAI's latest models.

According to Guthrie, Maia 200 beats comparable chips from Google and Amazon on certain performance metrics: its FP4 performance is three times that of Amazon's third-generation Trainium chip, and its FP8 performance exceeds that of Google's seventh-generation TPU. Microsoft has released a preview of the Maia 200 software development kit to developers, academics, and cutting-edge AI labs, and plans to make the chip available for rent to more cloud customers in the future.

The release highlights the fierce competition among tech giants for control over their own AI computing power. With NVIDIA chips in tight supply and expensive, Microsoft, Amazon, and Google are accelerating their in-house chip programs, aiming to offer cloud customers cheaper, more seamlessly integrated alternatives. Microsoft said it is already designing a follow-up product, Maia 300.

After the Maia 200 announcement, Microsoft shares, which had turned positive early in the session, extended their gains to more than 1% in early trading, rose more than 1.6% around midday, and closed up more than 0.9%. It was the stock's third consecutive daily gain and its highest close in nearly two weeks.

Performance Specs: Over 140 Billion Transistors Built for Inference

According to Guthrie's post on Microsoft's official blog, Maia 200 is manufactured on TSMC's cutting-edge 3-nanometer process, with more than 140 billion transistors per chip. Custom-designed for large-scale AI workloads, it delivers more than 10 petaFLOPS at 4-bit precision (FP4) and more than 5 petaFLOPS at 8-bit precision (FP8), all within a 750-watt chip power envelope. Guthrie emphasized that "in practical applications, a Maia 200 node can easily run today's largest models and leave ample room for even larger models in the future." The chip carries 216GB of HBM3e memory with 7 TB/s of bandwidth and 272MB of on-chip SRAM, along with a purpose-built DMA engine and data-movement architecture designed to keep large models running quickly and efficiently.
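The headline figures above support a few back-of-envelope calculations. The Python sketch below derives peak throughput per watt, the roofline "ridge point" (how many operations a kernel must perform per byte fetched from HBM before compute, rather than memory bandwidth, becomes the limit), and an upper bound on how many model weights fit in 216GB at each precision. It assumes decimal units (1 PFLOPS = 10^15 FLOP/s, 1 TB/s = 10^12 bytes/s), treats the quoted "over" figures as exact values, and ignores KV cache and activations. The function names are illustrative and are not part of any Microsoft SDK.

```python
# Back-of-envelope metrics derived from the Maia 200 figures quoted in the article.
# Assumption: the "over 10 petaFLOPS" style figures are used as exact values,
# so every output is a rough estimate, not a Microsoft-published metric.

FP4_PFLOPS = 10.0   # peak FP4 throughput, petaFLOPS
FP8_PFLOPS = 5.0    # peak FP8 throughput, petaFLOPS
POWER_W = 750.0     # chip power envelope, watts
HBM_GB = 216.0      # HBM3e capacity, gigabytes (decimal)
HBM_TBPS = 7.0      # HBM bandwidth, terabytes per second (decimal)


def tflops_per_watt(pflops: float, watts: float) -> float:
    """Peak throughput per watt, in teraFLOPS per watt."""
    return pflops * 1_000 / watts


def ridge_point(pflops: float, tbps: float) -> float:
    """Roofline ridge point: FLOPs needed per byte read from HBM before
    a kernel becomes compute-bound rather than memory-bound."""
    return (pflops * 1e15) / (tbps * 1e12)


def max_weights_billions(capacity_gb: float, bits_per_param: int) -> float:
    """Upper bound on parameters (in billions) whose weights fit in HBM.
    Ignores KV cache, activations, and runtime overhead."""
    bytes_per_param = bits_per_param / 8
    return capacity_gb / bytes_per_param


if __name__ == "__main__":
    for name, pflops, bits in (("FP4", FP4_PFLOPS, 4), ("FP8", FP8_PFLOPS, 8)):
        print(
            f"{name}: {tflops_per_watt(pflops, POWER_W):.1f} TFLOPS/W, "
            f"ridge point {ridge_point(pflops, HBM_TBPS):.0f} FLOP/byte, "
            f"~{max_weights_billions(HBM_GB, bits):.0f}B params fit in HBM"
        )
```

Run as a script, it prints about 13.3 TFLOPS/W, a ridge point of about 1,429 FLOP/byte, and room for about 432 billion FP4 weights, with each figure halving at FP8. Because token-by-token generation at small batch sizes performs only a few operations per weight byte, such workloads typically sit far below the ridge point and are limited by memory bandwidth, which is a general reason inference accelerators emphasize HBM bandwidth and on-chip SRAM.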

At the system level, Maia 200 uses a two-tier scale-up network built on standard Ethernet. Each accelerator provides 2.8 TB/s of dedicated bidirectional scale-up bandwidth, enabling predictable, high-performance collective operations across clusters of up to 6,144 accelerators. Within each tray, four Maia accelerators are fully interconnected by direct, non-switched links, and a unified Maia AI transport protocol allows seamless scaling across nodes and racks.
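The topology numbers above can be checked with simple arithmetic. The Python sketch below, a hedged illustration rather than anything from Microsoft's tooling, counts the trays in a maximum-size 6,144-accelerator cluster, the direct links a fully connected four-chip tray needs (n(n-1)/2), and naive cluster-wide totals obtained by summing per-chip peaks. Those sums ignore utilization, switching overhead, and bisection bandwidth, and `cluster_summary` is a hypothetical helper.

```python
# Scale-out arithmetic for the Maia 200 topology described in the article.
# The "summed" figures just add per-chip peaks; they are not achievable
# throughput and say nothing about bisection bandwidth.

MAX_ACCELERATORS = 6_144   # largest cluster size quoted
PER_TRAY = 4               # accelerators per tray, fully interconnected
SCALEUP_TBPS = 2.8         # bidirectional scale-up bandwidth per accelerator
FP4_PFLOPS = 10.0          # per-chip FP4 peak ("over 10 petaFLOPS")


def full_mesh_links(n: int) -> int:
    """Point-to-point links needed to connect n devices all-to-all."""
    return n * (n - 1) // 2


def cluster_summary(accelerators: int, per_tray: int) -> dict:
    """Hypothetical helper: tray count, in-tray mesh size, and summed peaks."""
    trays, remainder = divmod(accelerators, per_tray)
    if remainder:
        raise ValueError("cluster size must be a whole number of trays")
    return {
        "trays": trays,
        "links_per_tray": full_mesh_links(per_tray),
        "direct_peers_per_chip": per_tray - 1,
        "peak_fp4_exaflops": accelerators * FP4_PFLOPS / 1_000,
        "summed_scaleup_pbps": accelerators * SCALEUP_TBPS / 1_000,
    }


if __name__ == "__main__":
    for key, value in cluster_summary(MAX_ACCELERATORS, PER_TRAY).items():
        print(f"{key}: {value:g}")
```

It reports 1,536 trays, 6 links per tray (3 direct peers per chip), about 61.44 exaFLOPS of summed FP4 peak, and about 17.2 PB/s of summed scale-up bandwidth. The full-mesh formula also hints at why direct, non-switched links suit a small group like a tray: link count grows quadratically with group size, which is presumably why the Ethernet-based second tier takes over beyond the tray.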

Key Support for Cloud Business: From Copilot to OpenAI Models

The Maia 200 chip has become an important part of Microsoft's heterogeneous AI infrastructure, serving multiple models. Scott Guthrie's blog states that the next-generation AI accelerator gives Microsoft Azure an advantage in running AI models faster and more cost-effectively.

Guthrie disclosed that the chip will support OpenAI's latest GPT-5.2 model, giving Microsoft Foundry and Microsoft 365 Copilot a performance-per-dollar advantage.

Microsoft's superintelligence team will use the Maia 200 for synthetic data generation and reinforcement learning to improve the next generation of internal models. Guthrie pointed out in his blog that "for synthetic data pipeline use cases, the unique design of the Maia 200 helps accelerate the generation and filtering of high-quality, domain-specific data, providing fresher and more targeted signals for downstream training."

Both Microsoft 365 Copilot, the AI add-on for Microsoft's business productivity suite, and Microsoft Foundry, its service for building applications on top of AI models, will use the chip. As demand surges from generative AI model developers such as Anthropic and OpenAI, as well as from companies building AI agents and other products on popular models, cloud providers are racing to add computing power while keeping energy consumption under control.

Maia 200 is currently deployed in Microsoft's central U.S. data center region near Des Moines, Iowa, and will next expand to the western U.S. region near Phoenix, Arizona, with more regions planned. Microsoft invited developers, academics, and AI labs to start using the Maia software development kit beginning Monday, although it is unclear when Azure cloud customers will be able to access servers running the chip.

Reducing Dependence on NVIDIA: The Chip Race Among Tech Giants

Microsoft's chip initiative started later than those of Amazon and Google, but all three companies share a similar goal: to create cost-effective machines that can seamlessly integrate into data centers, providing cloud customers with cost savings and other efficiency advantages. The high costs and supply shortages of NVIDIA's latest industry-leading chips have spurred competition to find alternative sources of computing power.

Google has its Tensor Processing Units (TPUs), which it does not sell as chips but instead offers as computing power through its cloud services. Amazon has its own AI accelerator, Trainium, whose latest version, Trainium 3, was released last December. In each case, these in-house chips can take over some of the computing work originally assigned to NVIDIA GPUs, lowering overall hardware costs.

According to figures Guthrie disclosed in the blog post, Maia 200 outperforms its rivals on the metrics Microsoft cites: its FP4 performance is three times that of Amazon's third-generation Trainium chip, and its FP8 performance exceeds that of Google's seventh-generation TPU. Each Maia 200 chip also carries more high-bandwidth memory than either AWS's third-generation Trainium or Google's seventh-generation TPU. Its performance per dollar is 30% better than the latest generation of hardware Microsoft currently deploys.
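A 30% gain in performance per dollar is not the same as a 30% cost cut: the same amount of work costs 1/1.3 of the baseline, or roughly 23% less. The short Python sketch below makes that conversion explicit. The function name `relative_cost` and the $1,000,000 baseline budget are hypothetical illustrations, not figures from Microsoft.

```python
# Converting a "performance per dollar" gain into a cost reduction.
# The 30% gain is Microsoft's claim; the baseline budget is hypothetical.


def relative_cost(perf_per_dollar_gain: float) -> float:
    """Cost of the same amount of work, relative to baseline, when
    performance per dollar improves by `perf_per_dollar_gain` (0.30 = 30%)."""
    if perf_per_dollar_gain <= -1:
        raise ValueError("gain must be greater than -100%")
    return 1.0 / (1.0 + perf_per_dollar_gain)


if __name__ == "__main__":
    ratio = relative_cost(0.30)
    hypothetical_baseline_usd = 1_000_000  # illustrative budget, not from the article
    print(f"Same work costs {ratio:.1%} of baseline ({1 - ratio:.1%} less)")
    print(f"${hypothetical_baseline_usd:,} of work -> ${hypothetical_baseline_usd * ratio:,.0f}")
```

Run as a script, it prints that the same work costs 76.9% of baseline (23.1% less), so a hypothetical $1,000,000 workload would cost about $769,231.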

Guthrie, Microsoft's executive vice president of Cloud and AI, called Maia 200 "the most powerful self-developed chip among all hyperscale cloud service providers." Notably, the chip connects over Ethernet rather than InfiniBand, the standard used by the switches NVIDIA has sold since acquiring Mellanox in 2020.

Rapid Iteration: Maia 300 Already in Design

Microsoft said it is designing Maia 200's successor, Maia 300. According to Guthrie's blog post, the Maia AI accelerator program is planned as a multi-generational roadmap: "As we deploy Maia 200 in our global infrastructure, we are already designing for future generations of products, with each generation expected to continuously set new benchmarks for the most important AI workloads, providing better performance and efficiency."

Maia 200 arrives about two years after its predecessor, Maia 100, which Microsoft unveiled in November 2023 but never offered for rent to cloud customers. For the new chip, Guthrie said in Monday's blog post, "there will be broader customer availability in the future."

A core principle of Microsoft's chip program is to validate as much of the end-to-end system as possible before final silicon is available. A sophisticated pre-silicon environment guided the Maia 200 architecture from its earliest stages, simulating the computation and communication patterns of large language models with high fidelity. This co-development environment let Microsoft optimize the chip, network, and system software as a unified whole before the first chips were produced. As a result, Maia 200 was running AI models within days of the first packaged parts arriving, and the time from first chip to first data center rack deployment was less than half that of comparable AI infrastructure projects.

If internal efforts run into setbacks, Microsoft has other options: under its agreement with close partner OpenAI, the company can obtain emerging chip designs from the ChatGPT maker.