Has Amazon's "AI Turning Point" Just Arrived?

AWS's core Project Rainier data center is now fully operational and is training Anthropic's Claude large model. The system has deployed nearly 500,000 Trainium2 chips (expected to double to 1 million by the end of the year), making it one of the largest AI training computers in the world. This indicates that Amazon's AI infrastructure expansion is shifting from strategic planning to delivered capacity. Morgan Stanley expects AWS's revenue growth to reach 23% and 25% over the next two years, while Bank of America predicts that Anthropic alone could bring AWS up to $6 billion in incremental revenue by 2026.

With its core data center now officially in operation, Amazon's AI infrastructure buildout has reached a key milestone.

Just a few days ago, Amazon CEO Andy Jassy announced on social platform X:

What was once a cornfield near South Bend, Indiana, is now one of the largest AI computing clusters in the world: the core data center of Project Rainier. The system, jointly developed by AWS and the AI unicorn Anthropic, deploys nearly 500,000 of Amazon's in-house Trainium2 chips, is 70% larger than any AI platform in AWS's history, and is now fully operational.

According to Jassy, Amazon's partner Anthropic is using the system to train and run its large model Claude, with more than five times the computing power it used to train its previous AI models. By the end of the year, the number of Trainium2 chips deployed in the system is expected to double to 1 million.

This means Amazon's AI infrastructure expansion is shifting from strategic planning to delivered capacity, an important turning point for its AI business.

Morgan Stanley expects that AWS's revenue growth rate will reach 23% and 25% in the next two years, while Bank of America predicts that Anthropic alone could bring up to $6 billion in incremental revenue to AWS by 2026.

Supercomputing Cluster: Redefining the Scale of AI Infrastructure

The official launch of the Project Rainier system marks the beginning of AWS's large-scale AI capacity expansion.

The system spans multiple data centers in the United States and links tens of thousands of UltraServers through NeuronLink technology, with the aim of minimizing communication latency and improving overall computing efficiency.

Equipped with nearly 500,000 Trainium2 chips, it has become one of the largest AI training computers in the world. Amazon plans to add a further 1 GW of capacity by the end of the year and to increase the number of Trainium2 chips by approximately 500,000. More ambitiously, the company plans to double AWS's gigawatt capacity by 2027.

AWS CEO Matt Garman previously emphasized that these in-house chips can outperform general-purpose alternatives. Jassy said on the earnings call: “The adoption rate of Trainium2 continues to rise, and current capacity is fully booked. This business is expanding rapidly.”

Self-Developed Chip Strategy Shows Initial Results

The core of Amazon's AI strategy is not the model but the "computing power foundation," that is, its in-house chip lineup: the Trainium series (dedicated to AI training) and the Inferentia series (dedicated to inference) form AWS's "dual engines" in AI computing. Now, this strategy is showing results.

The Trainium series has grown into a multibillion-dollar core business, with quarter-on-quarter growth of 150%. The strategy not only helps reduce model training and inference costs but also ultimately improves AWS's profit margins.

Meanwhile, Amazon is preparing to launch Trainium3, which is expected to be unveiled at the re:Invent conference at the end of this year, with larger-scale deployment in 2026. The next-generation chip not only improves performance but, more importantly, will reach a broader customer base, meaning AWS's AI services will move beyond top-tier customers to the wider enterprise market.

Bank of America analyst Justin Post pointed out that the cost optimization effects brought by self-developed chips have already emerged: the adoption of Trainium has significantly reduced model training and inference costs, driving AWS's profit margin improvement and becoming a new billion-dollar growth engine.

Jassy previously revealed that the AI platform Bedrock being built by the company aims to become "the world's largest inference engine," with long-term development potential comparable to AWS's core computing service EC2. Currently, the vast majority of token usage on Bedrock is running on Trainium chips.

Morgan Stanley Upgrades Amazon Rating: AWS Enters an "AI Growth Acceleration Cycle"

Morgan Stanley, in its latest research report, has listed Amazon as a "Top Pick" and raised its target price from $300 to $315, indicating about a 25% upside from the current stock price.
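As a rough check on these figures, the stock price implied by the new target and the stated upside can be backed out with simple arithmetic. The sketch below is illustrative only: the function name `implied_current_price` is hypothetical, and the 25% upside is the report's approximate figure, so the result is an estimate rather than a quoted price.

```python
def implied_current_price(target_price: float, upside: float) -> float:
    """Price at which target_price represents the given fractional upside."""
    return target_price / (1.0 + upside)


old_target = 300.0  # previous target price (USD), from the article
new_target = 315.0  # raised target price (USD), from the article
upside = 0.25       # "about 25%" per the report; an approximation

price = implied_current_price(new_target, upside)
target_raise_pct = (new_target / old_target - 1.0) * 100.0

print(f"Implied current price: ${price:.0f}")   # $252
print(f"Target raise: {target_raise_pct:.1f}%")  # 5.0%
```

In other words, the target itself was raised by only 5%, while the roughly 25% upside is measured against an implied share price of about $252.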

The reason is simple—AWS is entering an "AI growth acceleration cycle." The analysts summarized four key growth drivers:

- Rapid capacity expansion: An additional 1 GW of computing power by the end of the year, with the number of Trainium2 chips doubling;
- Structural expansion cycle: AWS plans to add another 10 GW of data center capacity over the next 24 months;
- Surge in AI orders: New orders in October exceeded the entire third quarter, with approximately $18 billion in new business in a single month;
- Accelerated innovation: Trainium3 is expected to debut within the year, and the software platform Bedrock continues to expand.

The report pointed out that AWS is currently still in a "capacity-constrained" state—demand exceeding supply has become the core of the growth logic.

Based on its analysis of AWS's order backlog, Morgan Stanley estimates that the new business AWS signed in October exceeded that of the entire third quarter, implying roughly $18 billion in new business in October alone.

"If it weren't for the computing power bottleneck, AWS's growth would be faster," the analysts wrote. "These expansion plans are paving the way for a re-acceleration in 2026 to 2027." Morgan Stanley expects AWS's revenue growth to reach 23% and 25% over the next two years, with the explosion of AI demand expected to bring up to $6 billion in incremental revenue in 2026, lifting the overall growth rate by about 4 percentage points.

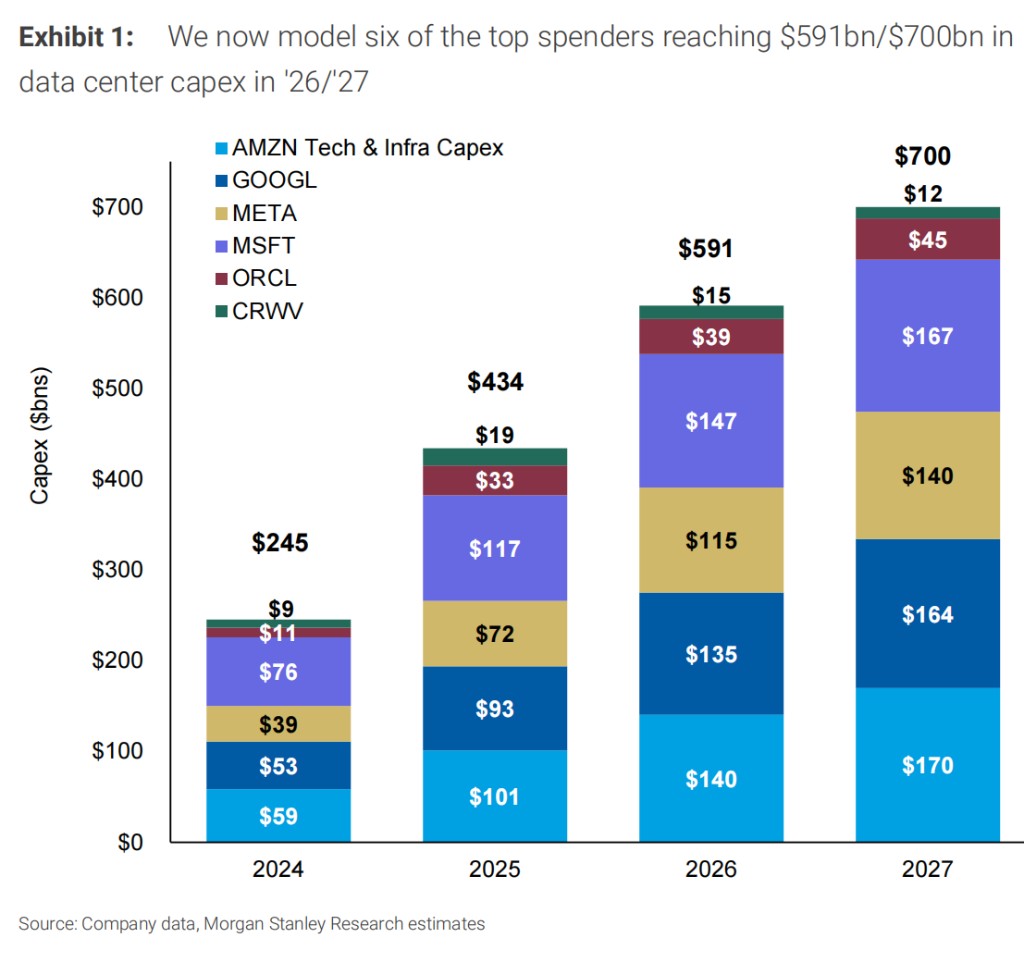

Morgan Stanley also raised its estimates of Amazon's capital expenditures for 2026 and 2027 by 13% and 19%, to totals of $169 billion and $202 billion respectively. Of these, $140 billion and $170 billion would go to technology and infrastructure, more than the spending of other giants such as Microsoft, Meta, and Google.
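To put the revised capital expenditure figures in context, the earlier estimates and the technology and infrastructure share can be derived from the numbers above. This is a minimal sketch under the assumption that the 13% and 19% raises apply to the total capital expenditure figures; the helper `prior_estimate` and the `summary` structure are hypothetical names used for illustration, and the outputs are approximations, not figures from the report.

```python
def prior_estimate(new_estimate: float, raise_fraction: float) -> float:
    """Back out the earlier estimate from the revised one and the raise."""
    return new_estimate / (1.0 + raise_fraction)


# year: (revised total capex in $B, fractional raise, tech & infrastructure in $B)
capex = {
    2026: (169.0, 0.13, 140.0),
    2027: (202.0, 0.19, 170.0),
}

summary = {}
for year, (total, raise_fraction, tech_infra) in capex.items():
    summary[year] = {
        "prior_total": prior_estimate(total, raise_fraction),
        "tech_infra_share": tech_infra / total,
    }
    print(
        f"{year}: prior estimate ~${summary[year]['prior_total']:.0f}B, "
        f"tech & infrastructure share ~{summary[year]['tech_infra_share']:.0%}"
    )
```

Under that assumption, the earlier totals were roughly $150 billion and $170 billion, and technology and infrastructure account for a little over four-fifths of planned spending in both years.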

Analysts believe that although Amazon is investing heavily to expand computing capacity, "once capacity becomes available, it is immediately absorbed," and that this is still a "very early stage, bringing unprecedented opportunities to AWS customers."