When Microsoft's "AI Faith" Collides with the Physical Boundaries of Data Centers: The "Computing Power Famine" Intensifies Amidst the AI Wave

Microsoft's data center supply and capacity constraints are now expected to persist into 2026, longer than management previously indicated. Multiple data center regions in the United States are short of physical space and servers, limiting the company's ability to meet demand for AI and cloud computing services. Global AI startups are raising record amounts of capital, underscoring the continued expansion of the AI computing power industry chain. Microsoft, Amazon, and Google all face similar limits, with demand exceeding supply, particularly for core power equipment and AI chips. Microsoft's Azure cloud computing platform is restricting new subscriptions in several U.S. regions, affecting the supply of high-performance AI servers.

According to Zhitong Finance APP, the data center supply shortage facing American tech giant Microsoft (MSFT.US) is likely to last longer than the company's management previously anticipated. This highlights the difficulty the cloud computing and office productivity software giant faces in deploying AI and meeting surging demand for cloud computing services and AI computing power from major global clients.

The severe shortage of Microsoft's data center supply and capacity may extend into 2026, beyond management's earlier prediction of "by the end of 2025." Recent statistics show that global AI startups, including OpenAI, have raised a record $192.7 billion, a sign that the global AI "burn rate war" is still in full swing and that the "super bull market trajectory" of the global AI computing power industry chain is far from over.

A lack of long-term, continuously available capacity to rent to clients, in both high-performance AI server clusters and traditional cloud computing servers, has been a persistent concern for North American cloud computing giants. Since ChatGPT became globally popular in 2023, Microsoft, Amazon, and Google have all described similar constraints. Amazon AWS management stated plainly earlier this year that "demand exceeds supply," with the main constraints being core power equipment, AI chips, and the pace at which power can be brought online. Google has raised its 2025 AI capital expenditure (CapEx) to about $85 billion and indicated that related spending will be even higher in 2026, focused primarily on AI computing power infrastructure. Microsoft, for its part, has been working to balance the massive computing power demands of its data center customers.

However, the latest internal forecasts from Microsoft indicate that several core Azure cloud platform regions in the U.S. (such as Northern Virginia and Texas) will limit new Azure subscriptions until the first half of 2026, affecting resources for two types of server clusters: those based on high-performance AI GPUs and those based on data center CPUs.

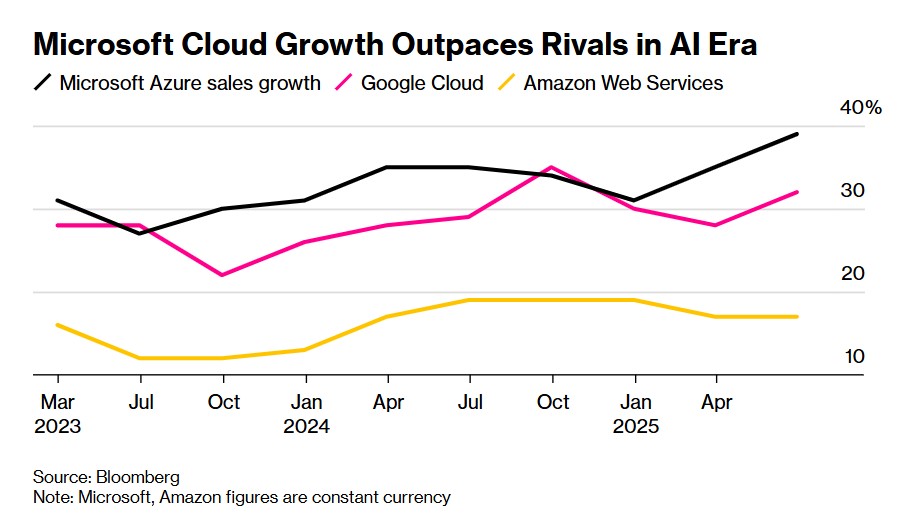

Currently, Microsoft’s acute data center supply shortage not only affects AI workload server clusters running NVIDIA's high-performance AI GPUs but also impacts traditional cloud computing service data centers primarily based on central processing units (CPUs). As Microsoft’s most important (if not the only) performance growth engine, the Azure cloud service platform is expected to generate over $75 billion in revenue in fiscal year 2025, with its cloud platform's business expansion speed surpassing that of major competitors Amazon AWS and Google Cloud.

Even as data center supply and capacity remain acutely short, the data center expansion wave led by global tech giants including Microsoft, Amazon, and Google, centered on AI infrastructure investment and construction, continues to accelerate, keeping the entire AI computing power industry chain in a pronounced boom. This is also why the stock prices of AI computing power industry leaders such as NVIDIA, AMD, TSMC, Broadcom, Micron Technology, and SK Hynix have recently hit repeated record highs.

Microsoft's Latest Forecast Indicates Data Center Supply Shortage May Last Until 2026

Media reports citing informed sources indicate that many large data center regions in the United States are experiencing severe shortages of physical space or physical server clusters. Informed sources who requested anonymity to discuss internal data forecasts stated that new subscriptions for Azure cloud computing services in some core areas, including key server hub regions like Northern Virginia and Texas, will be limited until the first half of next year.

This timeframe is longer than what the company's management previously indicated. In July, Microsoft Chief Financial Officer Amy Hood stated that the current data center capacity constraints would last until the end of 2025. Informed sources noted that the shortage of data center supply and capacity is affecting Microsoft's high-performance AI GPU server clusters used for cloud AI training/inference projects, as well as the traditional computing power dominated by data center central processing units (CPUs), which have long been the main chips for traditional cloud computing services.

Azure is currently Microsoft's most important growth engine: the cloud business is expected to exceed $75 billion in revenue in fiscal year 2025. It is expanding at a pace that has significantly outstripped its largest competitors, Amazon.com Inc. and Google Cloud Platform under Alphabet Inc. As shown in the image above, Azure's growth continues to outpace that of both rivals in the AI era.

In recent years, the shortage of high-performance AI server clusters has been a recurring concern for cloud service providers. In the past six quarterly earnings calls, Microsoft management has stated that they are unable to meet all customer demands for traditional cloud computing and AI computing power. Amazon and Google have also described similar limitations.

A Microsoft spokesperson publicly stated that most Azure services and regions in the United States "have available capacity, allowing existing data center customers with deployed workloads to expand in an orderly manner." The spokesperson mentioned that when faced with unexpected surges in demand, the company would implement "supply and capacity protection methods" to balance customer demand within its data center clusters.

Azure customers often choose data center regions based on physical distance and available software. According to internal guidelines, when preferred facilities lack space, Microsoft sales personnel will guide customers to other regions with additional data center capacity. However, sources familiar with the work indicated that these workaround practices can increase the complexity of workloads and extend the time required for data to be transmitted between server farms and customers.

According to Apurva Kadakia, Head of Global Cloud and Partnerships at Hexaware Technologies, in some cases customers encountering Azure capacity issues may shift their business elsewhere. He stated that some customers may connect to multiple Azure regions in a short period or only migrate critical workloads to the cloud until more capacity becomes available.

"Our team regularly collaborates with large customers to plan around demand peaks (such as holidays), guiding them to choose the most suitable cloud computing regions and products," said a Microsoft spokesperson. "In exceptional cases where individual customers face rising costs or data transfer delays, Microsoft will compensate for their additional expenses."

Data center capacity continues to be tight, and the global AI computing power industry chain continues to soar?

Microsoft has undertaken a historically large construction program in recent years to bring bigger data centers online, adding over 2 gigawatts of data center capacity in the past year, roughly equivalent to the generating capacity of the Hoover Dam.

"Since the official launch of ChatGPT and GPT-4, it has been nearly impossible to build data center capacity fast enough," said Microsoft Chief Technology Officer Kevin Scott in an interview in early October. He was referring to the popular AI chatbot and its underlying AI large language model, which is continuously updated by one of Microsoft's largest customers, OpenAI. "Even our most ambitious forecasts often prove to be far from sufficient."

The incredibly strong demand for cloud AI computing power driven by the AI wave continues to push the demand for a large number of new data centers. However, Microsoft is also facing extreme shortages in its traditional cloud computing infrastructure, which supports most applications and websites on the internet.

According to sources familiar with Microsoft's operations, OpenAI is also one of Microsoft's largest customers for these traditional cloud computing workloads based on data center CPUs.

Microsoft also uses a large amount of cloud computing resources to host its own workloads and applications, such as the Office suite. According to insiders, some Microsoft employees have been told to shut down new internal projects in affected regions to save capacity.

Bringing a data center online, from initial planning to the official launch of its server clusters, can take several years. Insiders stated that in the current global build-out of AI computing infrastructure, several key components, including semiconductors and power supply equipment such as transformers, have long delivery times.

Sources said that for key customers looking to obtain additional cloud computing or AI computing capacity in tight Azure regions, Microsoft may make exceptions to provide support. The supply situation outside North America is relatively optimistic; for example, many of Microsoft's core cloud computing regions in Europe can accept new customers for cloud computing service subscriptions without restrictions.

During the earnings call in July, Microsoft Chief Financial Officer Amy Hood said the ongoing supply and capacity shortages stem from rising demand for data centers, driven by the explosive global growth of AI computing power. She said on the call, "Oh my, I actually talked about this issue in January and said that I thought our supply-demand situation would be better by June. And now I hope it will be a bit better by December."

Several recent developments have significantly strengthened the "long-term bull market narrative" for AI computing infrastructure sectors such as AI GPUs, ASICs, HBM, data center SSD storage systems, liquid cooling systems, and core power equipment. Prices of high-performance DRAM and NAND storage products have surged worldwide; Oracle recently reported a contract backlog of $455 billion, far exceeding market expectations; and Broadcom, the global AI ASIC chip "superpower," announced strong results and a strong outlook.

AI computing demand at the inference end, driven by generative AI applications and AI agents, can be described as a "sea of stars," and is expected to keep the AI computing infrastructure market growing exponentially. Jensen Huang also considers the "AI inference system" to be the largest source of future revenue for NVIDIA.

Against the backdrop of epic stock price surges and consistently strong results this year from tech giants such as NVIDIA, Meta, Google, Oracle, TSMC, and Broadcom, an unprecedented AI investment boom has swept the U.S. and global stock markets. It has driven the S&P 500 and the global benchmark MSCI World Index sharply higher since April, with both recently setting record highs.

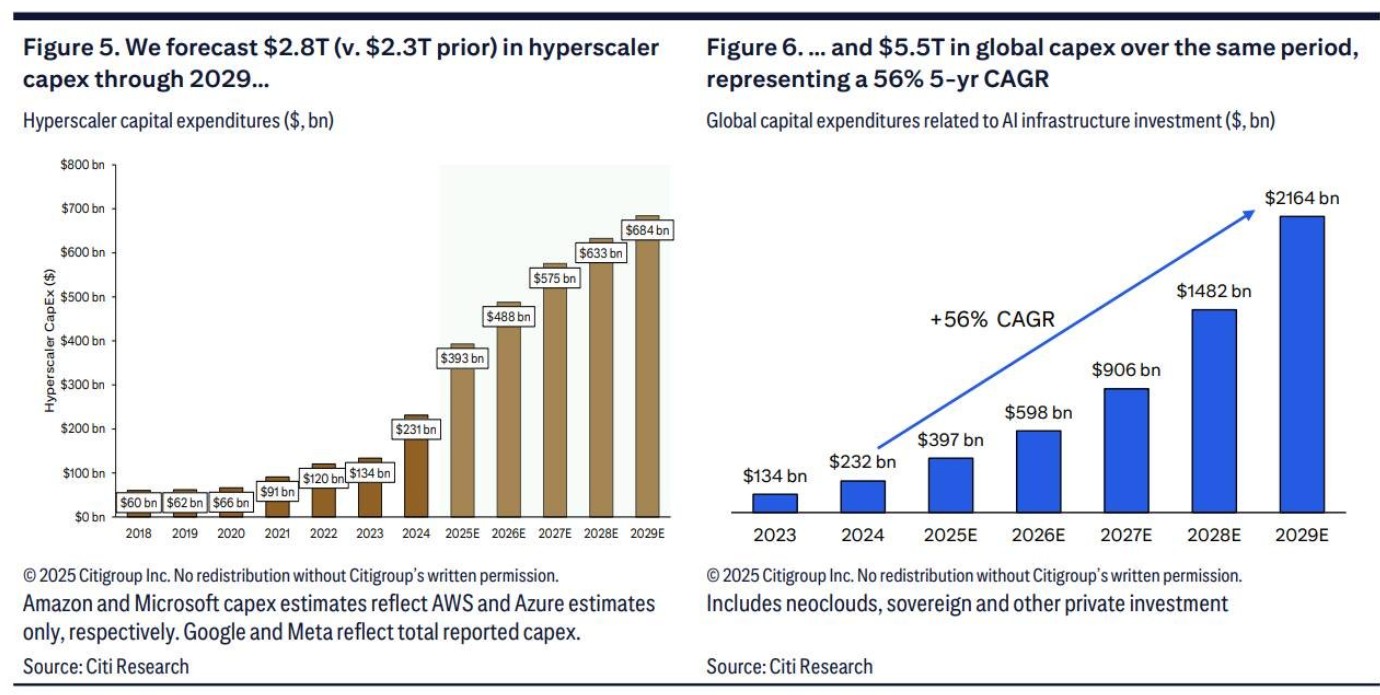

According to Wall Street financial giants Citigroup, Loop Capital, and Wedbush, the global wave of artificial intelligence infrastructure investment centered on AI computing hardware is far from over and is only just beginning. Driven by an unprecedented "AI computing demand storm," this round of AI investment is expected to reach a scale of $2 trillion to $3 trillion. NVIDIA CEO Jensen Huang goes further, predicting that AI infrastructure spending will reach $3 trillion to $4 trillion by 2030, and that the scale and scope of these projects will bring significant long-term growth opportunities for NVIDIA.

Recently, Citigroup's senior analysts significantly raised their forecasts for AI infrastructure spending by the world's largest tech giants, including Microsoft, Google, Amazon, Meta, and SAP, lifting the 2026 forecast from $420 billion to $490 billion. Citigroup also raised its forecast for the tech giants' cumulative AI infrastructure spending by 2029 from $2.3 trillion to $2.8 trillion. In addition, according to the calculations in this research report, global demand for AI computing is expected to add 55 gigawatts of power capacity by 2030, which is anticipated to translate into up to $2.8 trillion in incremental AI computing-related spending, with the U.S. market accounting for $1.4 trillion.
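
The Citigroup figures above imply a few simple ratios that are easy to check. The short Python sketch below is illustrative only: the variable names and the `pct_change` helper are assumptions introduced here, not part of the research report, and the per-gigawatt figure is a back-of-the-envelope division of the report's upper-bound spending by its capacity estimate, not a number Citigroup itself published.

```python
def pct_change(old: float, new: float) -> float:
    """Percentage change from old to new."""
    return (new - old) / old * 100.0


# Figures quoted in the article, in billions of U.S. dollars.
forecast_2026_old_bn = 420.0      # previous 2026 AI infrastructure forecast
forecast_2026_new_bn = 490.0      # revised 2026 forecast
cumulative_2029_old_bn = 2300.0   # previous cumulative forecast by 2029
cumulative_2029_new_bn = 2800.0   # revised cumulative forecast by 2029
incremental_spend_bn = 2800.0     # upper-bound incremental spending by 2030
us_spend_bn = 1400.0              # U.S. portion of that incremental spending
added_capacity_gw = 55.0          # added power capacity by 2030, in gigawatts

# Size of each forecast revision, in percent.
raise_2026_pct = pct_change(forecast_2026_old_bn, forecast_2026_new_bn)
raise_2029_pct = pct_change(cumulative_2029_old_bn, cumulative_2029_new_bn)

# Implied spending per gigawatt (illustrative division, not a reported figure).
spend_per_gw_bn = incremental_spend_bn / added_capacity_gw

# U.S. share of the incremental spending.
us_share = us_spend_bn / incremental_spend_bn

print(f"2026 forecast raised by {raise_2026_pct:.1f}%")
print(f"Cumulative 2029 forecast raised by {raise_2029_pct:.1f}%")
print(f"Implied spending per gigawatt: ${spend_per_gw_bn:.1f} billion")
print(f"U.S. share of incremental spending: {us_share:.0%}")
```

On these inputs, the 2026 forecast rises by roughly 17%, the cumulative forecast by roughly 22%, the implied spending comes to about $51 billion per gigawatt, and the U.S. accounts for half of the incremental total.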