After a sharp drop, "AI infrastructure giants" offer a left-side buying opportunity! DeepSeek sparks an efficiency revolution, but demand for computing power remains "endless."

After a sharp decline in U.S. stocks, investors are turning their attention to "AI infrastructure giants," which may now offer a good opportunity to build positions. Trump's tariff policies have dented corporate confidence and made investors cautious toward U.S. stocks, with technology stocks hit especially hard by selling. The Nasdaq fell 4%, and the market value of the seven major U.S. tech companies shrank by more than $830 billion. Despite these headwinds, demand for AI computing power continues to grow.

The series of tariff measures Trump has announced since returning to the White House has severely hurt the confidence of American businesses and consumers, and global investors have grown increasingly cautious toward the U.S. market. Since March, a growing number of hedge funds have been pulling out of U.S. stocks. In particular, the tariffs on major trading partners such as Canada, Mexico, and China have rattled the stock market, and more and more investors worry that the U.S. economy could slide into "stagflation" or even a "deep recession." This is the core logic behind the recent continuous decline in U.S. stocks.

On Monday, the selling in the U.S. stock market continued and intensified in technology stocks. Trump and U.S. Treasury Secretary Scott Bessent have recently offered no supportive or reassuring signals for the stock market; instead, they have emphasized that as the U.S. economy weans itself off government spending, it may inevitably go through a "detox" period. Trump himself has not directly ruled out a U.S. recession, saying the economy will go through a "transition period" and that tariffs will inevitably cause "disturbances," while stressing that the tariffs are meant to make America prosperous again and "Make America Great Again."

Unsurprisingly, the sell-off in U.S. tech stocks intensified. The Nasdaq Composite Index, home to the world's top tech companies and, together with the "AI infrastructure stocks," the engine of the U.S. stock market's long-term bull run since 2023, fell 4% in a single day, its largest one-day drop since September 2022. The "Magnificent 7," the seven U.S. megacaps (including Nvidia, Microsoft, Apple, and Tesla) that carry heavy weight in the S&P 500 and Nasdaq indices, all fell by at least 2%, with Tesla plunging more than 15%, its largest decline since September 2020. As of Monday's close, the combined market value of the "Magnificent 7" had shed more than $830 billion, a record single-day loss.

The Logic of the "Jevons Paradox" Keeps Playing Out, and Demand for AI Computing Power Remains Vast

From a stock-market investment perspective, after the extended slide in U.S. tech stocks, it may be time to consider left-side positioning, that is, buying on the dip, before a bottom is confirmed, the leaders in AI infrastructure construction that have powered the U.S. stock market's long-term bull market since 2023. These include Nvidia, Broadcom, TSMC, and Vistra Corp, which have drawn the most market attention since 2023.

Since the start of 2025, stocks closely tied to artificial intelligence, especially those in the AI infrastructure sector, have continued to weaken and have been caught in a selling vortex as expectations of U.S. "stagflation" and "deep recession" have risen; after all, the relentless rally since 2023 had pushed their valuations to historic highs. **However, after a sell-off that has squeezed out much of the bubble, the valuations of these "AI infrastructure stocks" have fallen significantly. Wall Street institutions such as Morgan Stanley, BlackRock, and Bank of America generally remain strongly bullish on AI infrastructure stocks (including AI GPU leader Nvidia, ASIC giant Broadcom, and power giant Vistra) and expect their share prices to follow a "long-term bull market trajectory."**

The emergence of DeepSeek-R1, together with the foundational code DeepSeek recently open-sourced that has a profound impact on AI training and inference, can be said to have fully ignited an "efficiency revolution" in AI training and inference. It is pushing the future development of AI large models toward two core priorities, "low cost" and "high performance," rather than burning money to train large models in the "brute force works miracles" manner. It is important to note, however, that by broadly catalyzing generative AI software, AI agents, and other AI application tools, DeepSeek is driving massive demand for AI inference computing power, which means the demand outlook for AI chips and other AI computing infrastructure remains vast.

Wall Street financial giant Morgan Stanley stated in a recent research report that, with demand for AI computing power growing rapidly and related infrastructure investment rising significantly, the recent sharp stock-market correction has made the valuations of key players in the generative AI infrastructure value chain attractive. Because these companies generally have very strong fundamentals, the pullback gives investors a good entry point into this core AI investment theme.

BlackRock, the world's largest asset manager, recently stated that despite high uncertainty around Trump's policies, the artificial intelligence theme and strong corporate earnings have led it to keep a tactical overweight on U.S. stocks.

Microsoft CEO Satya Nadella has previously cited the "Jevons Paradox": when technological innovation makes a resource much more efficient to use, total consumption of that resource does not fall but instead surges. Applied to AI computing power, it suggests that as AI large models become cheaper to run and their application scale explodes, demand for AI inference computing power will reach unprecedented levels.

As NVIDIA (NVDA.US) CEO Jensen Huang stated on the company's latest earnings conference call, market demand for Blackwell architecture AI GPUs keeps expanding, demand for AI-chip-based computing power remains strong, and the company is excited about the potential demand that AI inference will bring: "DeepSeek-R1 has ignited global enthusiasm. It is an outstanding innovation, but more importantly, it has open-sourced a world-class reasoning AI model. Models like OpenAI's, Grok-3, and DeepSeek-R1 are reasoning models that apply inference-time scaling, and almost every AI developer is using R1, or chain-of-thought and reinforcement learning techniques like R1's, to enhance their model's performance. Future reasoning models will consume more than 100 times the computing power."

As the highly anticipated DeepSeek-R1 continues to sweep the globe, and DeepSeek's latest research shows that its NSA (Native Sparse Attention) mechanism delivers revolutionary training and inference efficiency for Transformer-based AI large models, AI model developers worldwide have begun following this "ultra-low-cost AI large model computing paradigm." **This will drive the accelerated penetration of AI application software (especially generative AI software and AI agents) into industries worldwide, fundamentally transforming efficiency across business scenarios and significantly boosting sales. Demand for AI-chip-driven computing power may therefore grow exponentially in the future, rather than falling off a cliff as many had expected after the "DeepSeek shockwave."**

The Wave of Spending on Large Data Centers Shows No Sign of Stopping! AI Infrastructure Giants Expected to Keep Growing in Earnings and Stock Price

Morgan Stanley emphasized in its research report that, against the backdrop of exceptionally strong demand for generative AI and a sustained investment trend, fundamentally strong AI infrastructure stocks are likely to deliver significant excess returns over the next 12 months. Major U.S. tech giants such as Meta, Amazon AWS, and Microsoft continue to invest heavily in building or expanding large data centers, which are crucial both for running generative AI applications like ChatGPT and DeepSeek efficiently and for the iterative updates of the underlying large models.

The firm's analysts stated that the earnings and valuations of the AI infrastructure leaders most closely tied to data centers are expected to keep expanding significantly, as global hyperscale data center spending focuses mainly on core AI computing hardware such as AI GPUs and AI ASICs, along with high-performance networking equipment and power infrastructure.

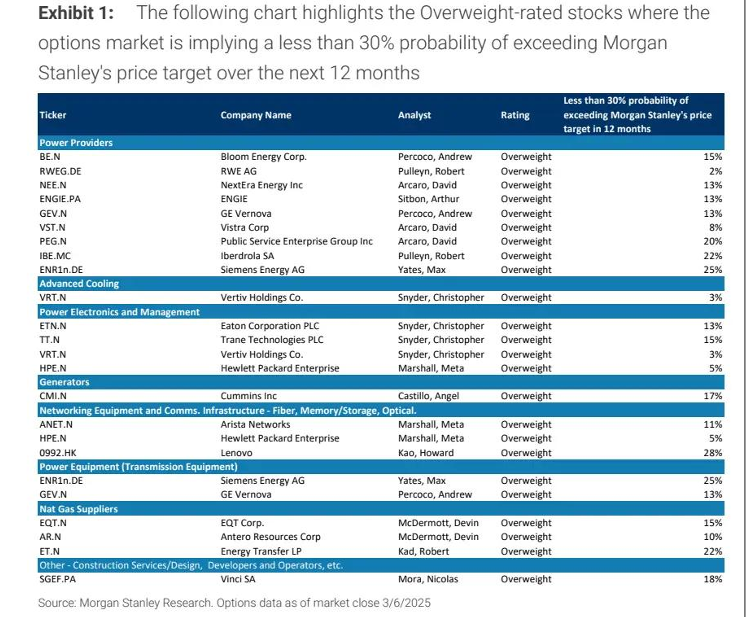

Morgan Stanley's report highlights the most important forces behind the global wave of data center construction and expansion: fundamentally strong giants in AI infrastructure, concentrated mainly in core AI computing hardware, key power equipment, and both traditional and clean energy. Examples include AI GPU leader NVIDIA (NVDA.US); AI ASIC leader Broadcom (AVGO.US); Vistra Corp (VST.US), a U.S. integrated energy company that operates power plants and sells retail electricity; Eaton (ETN.US), an electrical equipment and power management company with a presence in data center power management; EQT (EQT.US), one of the largest natural gas producers in the U.S.; NextEra Energy (NEE.US), the largest clean energy and utility company in the U.S.; and Arista Networks (ANET.US), a major cloud networking equipment supplier and a leader in high-speed Ethernet switches.

Morgan Stanley's analysts stated that feedback from the Morgan Stanley TMT conference reinforced the bullish case for the entire AI infrastructure sector: although novel algorithms like DeepSeek's improve the overall efficiency of large model training and inference, large enterprises' enthusiasm for investing in AI infrastructure remains undiminished, with no sign of slowing investment momentum on the demand side of AI computing. At the conference, Microsoft executives said that in the short term they can secure sufficient power and AI chip supply for new data centers, but that in the long term the entire industry may face shortages of skilled talent, computing resources, and power. They noted that the industry must secure enough AI chips, skilled engineering labor, and reliable energy supply to jointly build the necessary computing infrastructure over the next decade.

AI computing resources and electricity will become major bottlenecks. OpenAI pointed out that the world needs to massively expand wafer fab capacity, robotics infrastructure, and electrical facilities to support AI development, and suggested that "if controlled nuclear fusion succeeds, we will have nothing to worry about."

Morgan Stanley expects the U.S. data center power gap to exceed 40 GW by 2028, a shortfall that will need to be closed through solutions such as natural gas power generation, fuel cells (such as Bloom Energy's fuel cell technology), converting Bitcoin mining sites, and co-locating data centers with nuclear power plants.

On how investment differs across the pre-training, post-training (fine-tuning), and inference stages of AI large models, the Morgan Stanley report offers a clear perspective. Overall, even after a model's training phase is complete, substantial computing resources are still required for subsequent fine-tuning and optimization and for inference deployment; in particular, the "chain-of-thought" mechanism used during inference significantly increases computing power consumption. According to Morgan Stanley, NVIDIA executives noted that as models incorporate multimodal data (such as video, images, documents, and charts) during pre-training to raise their intelligence, model scale and complexity grow, so computing power demand in the pre-training phase keeps rising. More importantly, computing power demand in the fine-tuning phase after training will jump by an order of magnitude. Fine-tuning requires model refinement and instruction tuning to strengthen the model's inference and reasoning capabilities, which in turn significantly increases the computing resources needed at inference time.

Executives from Meta, Facebook's parent company, also stated that cutting-edge AI large model technology is evolving rapidly, whether in the data mix for pre-training (such as introducing video and synthetic data) or in model architecture innovations (such as the "Mixture-of-Experts" architecture); and after training is complete, many new methods have emerged beyond human-involved fine-tuning. These changes mean that continuous investment in flexible, energy-efficient infrastructure is essential: it must be able to change direction based on the latest research, ensure that however much computing power pre-training needs, the invested resources can ultimately be fully utilized in practical applications, and keep pace with the continuing explosive growth in fine-tuning and inference computing demand. This means AI GPUs and AI ASICs will need to be comprehensively integrated over the medium to long term.

Overall, Morgan Stanley stated that demand-side investment enthusiasm for generative AI has not changed in any way and will not slow in the short term. "Therefore, we still believe that many fundamentally strong companies in the AI infrastructure value chain have significant excess return potential. The stocks in these AI infrastructure areas have good fundamentals, and their recent weakness is driven more by overall market factors than by any deterioration in company fundamentals." Driven by the super wave of generative AI, the demand outlook for power and energy infrastructure, AI computing equipment, and data center network infrastructure remains positive.

Bank of America, another major Wall Street institution, recently released a research report supporting Morgan Stanley's core view that the earnings and valuations of AI infrastructure leaders should keep expanding amid the wave of data center spending. Bank of America projected that capital expenditures by hyperscale data center operators will rise significantly from an already strong 2024 base, growing 34% year on year in 2025 to reach $257 billion.

Global "hyperscale cloud service and cloud AI computing providers," including Amazon (AMZN.US), Microsoft (MSFT.US), and Facebook's parent company Meta (META.US), have been investing huge amounts of money in data center construction or expansion to meet the explosive growth in demand for AI training/inference computing power.

Amazon's management expects its 2025 capital spending to reach $100 billion. Amazon believes the emergence of DeepSeek means demand for inference-side AI computing power will expand significantly in the future, so it is increasing spending to support its AI business. CEO Andy Jassy stated, "We will not make purchases without seeing significant demand signals. When Amazon AWS expands its capital expenditures, especially for once-in-a-lifetime business opportunities like AI, I think this is a very good signal for the medium- to long-term development of the AWS business."